Multimodal Models

To run and interact with multimodal models in TransformerLab, you must first download a model from the Model Zoo or import your own. Currently, only models that use the LlavaForConditionalGeneration architecture are supported.

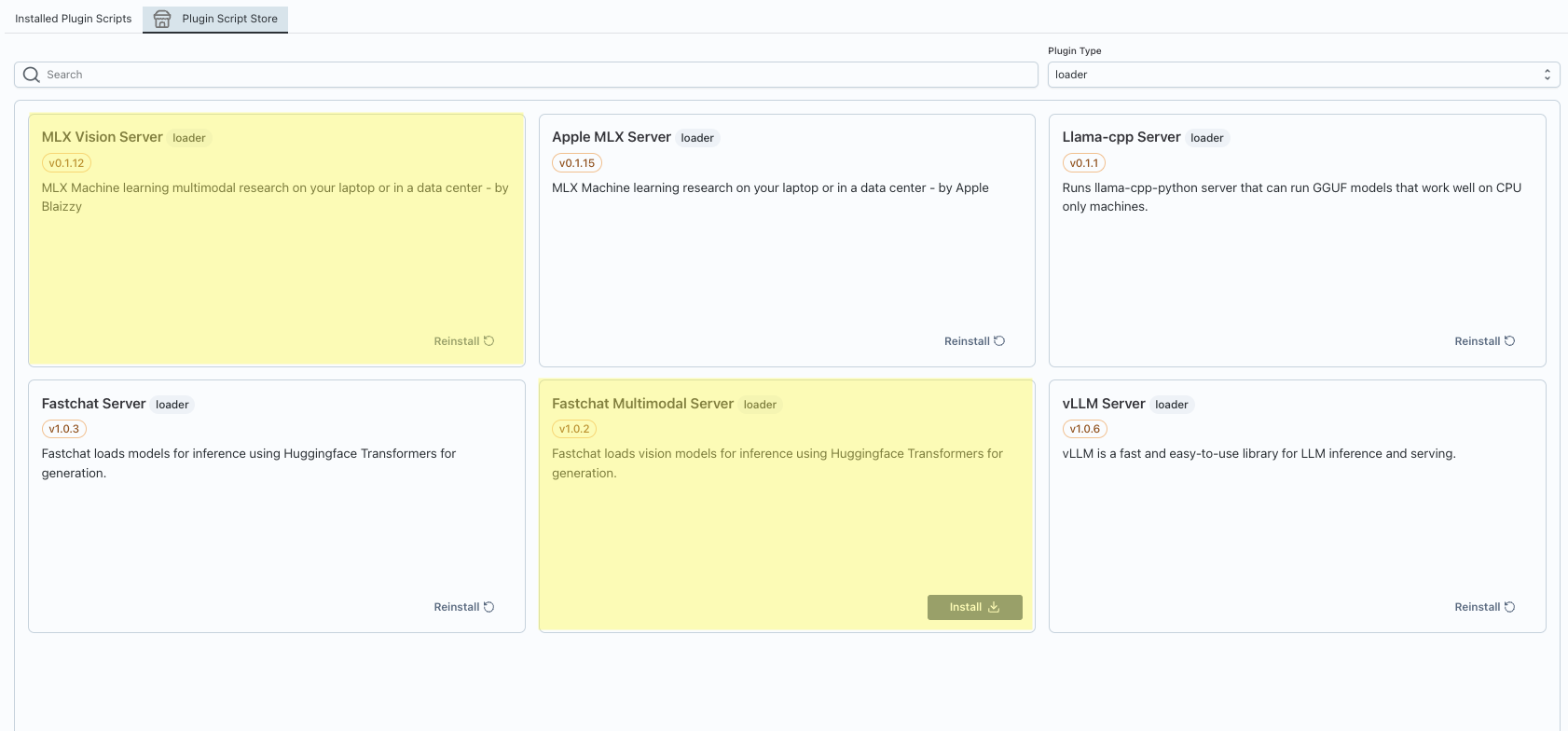
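If you plan to import your own model, you can check its architecture before importing it. Hugging Face-style model directories declare their model class in the `architectures` list of `config.json`. The sketch below assumes such a directory layout. `is_supported_multimodal_model` is a hypothetical helper for illustration, not part of TransformerLab.

```python
import json
import tempfile
from pathlib import Path

# The only multimodal architecture TransformerLab currently supports.
SUPPORTED_ARCHITECTURE = "LlavaForConditionalGeneration"


def is_supported_multimodal_model(model_dir: str) -> bool:
    """Return True if config.json in model_dir lists the supported architecture.

    Hypothetical helper: assumes a Hugging Face-style model directory whose
    config.json has an "architectures" list of model class names.
    """
    config_path = Path(model_dir) / "config.json"
    if not config_path.is_file():
        return False  # No config.json, so the architecture cannot be determined.
    with config_path.open(encoding="utf-8") as f:
        config = json.load(f)
    # "architectures" may be missing or null; treat both as "not supported".
    architectures = config.get("architectures") or []
    return SUPPORTED_ARCHITECTURE in architectures


if __name__ == "__main__":
    # Demo: build a throwaway model directory with a minimal config.json.
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "config.json").write_text(
            json.dumps({"architectures": [SUPPORTED_ARCHITECTURE]}), encoding="utf-8"
        )
        print(is_supported_multimodal_model(tmp))  # True
```

A model whose `config.json` lists a different class, such as a text-only `LlamaForCausalLM`, returns `False`.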

Choosing a Plugin/Inference Engine

Which plugin you should download depends on your hardware.

On CPU-based machines, download either the MLX Vision Server or the FastChat Multimodal Server.

On GPU-enabled machines, or other hardware that FastChat supports, download the FastChat Multimodal Server.
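The plugin guidance above reduces to a single hardware check. The sketch below encodes it as a hypothetical helper, `recommended_plugins`, which is not part of TransformerLab; it assumes you already know whether the machine has a usable GPU and simply returns the plugin names listed in this section.

```python
def recommended_plugins(has_gpu: bool) -> list[str]:
    """Return the multimodal plugins suggested for a machine (hypothetical helper).

    Mirrors the guidance in this section: GPU-enabled machines use the
    FastChat Multimodal Server; CPU-based machines can use either the
    MLX Vision Server or the FastChat Multimodal Server.
    """
    if has_gpu:
        return ["FastChat Multimodal Server"]
    return ["MLX Vision Server", "FastChat Multimodal Server"]


if __name__ == "__main__":
    print(recommended_plugins(has_gpu=False))
    print(recommended_plugins(has_gpu=True))
```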

Interact with the Model

Once the plugin is installed, load and run your model. You can then interact with it as you would with any other model, with the addition that your prompts can include images.

Training multimodal models is not currently supported.

Here is a video explaining the above steps: